“Once, men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.”

Opinions about it here on Lemmygrad are quite… divided from what I’ve seen. Really the only thing I’ve seen this community split 50/50 on.

I don’t like it. (For clarity, I will define AI as neural-network programs that use pattern-seeking to create output.) It uses way more power than it is good for, and although I sometimes put climate change on the backburner due to necessity, it emits lots. It does not build things like houses and airplanes, its advice cannot help people fix things, and despite people saying “it will get better,” it has not improved in proportion to the resources put in. Despite most image-generation programs creating incredibly realistic forged images, they have no use in actually making anything valuable, nor in recording things. It is not like carbon dating, the steam engine, or the soda can. It just eats electric power and shits out lies.

I think any issues with the “ethics” of AI image generation really are critiques of our current mode of production, i.e. how people currently use AI to displace jobs. My personal opinion is that some of it honestly looks kinda shit. Although if I can’t tell, great, by all means use it responsibly.

Training these models is a horrible waste of resources. They aren’t nearly good enough to justify the resources that are consumed.

This is a good case study in how even otherwise principled and clear-minded communist-sympathizing people can forget there’s a difference between “I hate capitalism” and “I hate [the form of thing when it is distorted by capitalism]”. And when we are immersed in capitalism, it’s easy to assume a thing is inherently the way it is because that’s what we’re used to, rather than seeing it as a form unique to capitalist dictatorship. If that’s overly vague, then good in this case. I want people to think about it beyond the immediate reaction.

I wanted to highlight one practical use of generative AI. There’s a Russian Marxist-Leninist channel called “Думай Сам / Думай Сейчас” (“Think for Yourself / Think Now”), which in my opinion produces some of the highest-quality communist video content on YouTube.

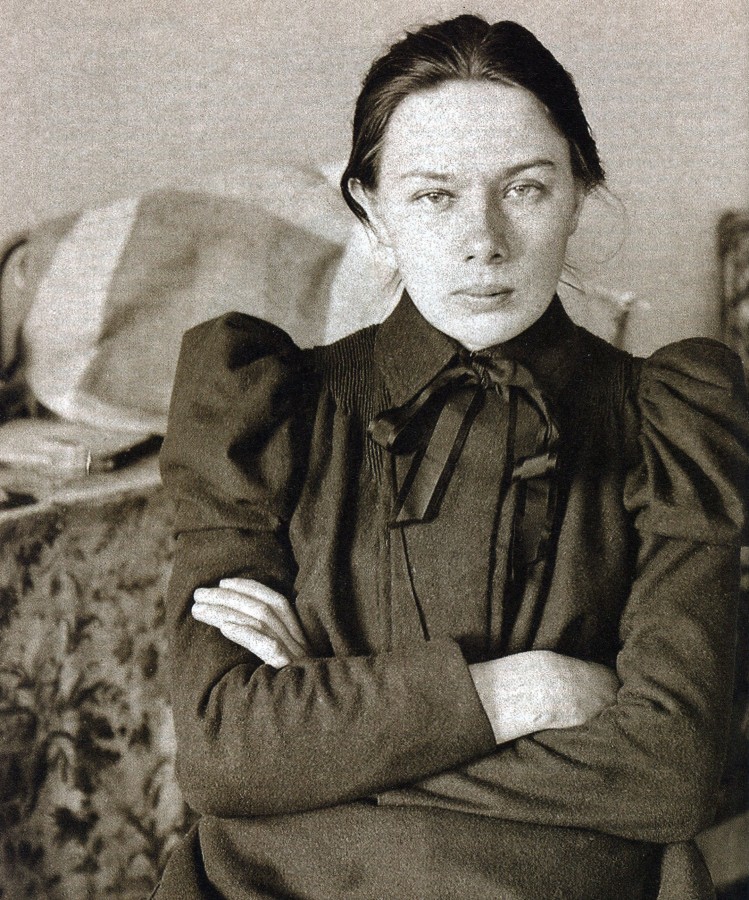

I think they make partial use of AI to generate images for community posts or shorts, here for example:

They also use AI for showing old historical photos with some movement, like people walking or moving their hands, to keep their videos dynamic. I don’t think it’s a bad thing since it reduces the workload and adds aesthetic value. And if I have to be honest, I didn’t even notice at first that they were using it, and they still do a lot of manual work, between scripting, animating images manually, editing and doing 3D modeling and animation.

Also remember that anti-communists/reactionaries (e.g. PragerU) get millions of dollars in budget and thus can produce their shit in industrial quantities. We, on the other hand, have to be resourceful with what tools are available.

That being said, AI like everything else is weaponized under capitalism, but I think we all know that.

And I know some people usually lament about AI looking bad or removing creativity, and while I disagree with that, here I’m talking about how it can lighten labor for agitprop, not to substitute creativity or fire people or whatever. Not everyone can learn perspective, anatomy, coloring, shading, etc. just to post agitprop that looks cool online. Again, this is from an agitprop point of view.

Food for thought

Thank you for this recommendation! (The Real Lenin was a pleasure to watch)

Anytime, comrade! :)

Do you have links to the channel?

Sure! Here: https://www.youtube.com/@ThinkYourSelf_ThinkNow

Pollutant wastes producing slop that steals from artists to feed the profit of fascists.

Western AI is a con.

“AI” image and video generation is soulless, ugly, and worthless. It isn’t art; it is divorced from the human experience. It is incredibly harmful to the environment. It is used to displace art & artists and replace them with garbage filler content that sucks even more of the joy from this world. Just incredibly wasteful and aesthetically insulting.

I think these critiques apply to “GenAI” more broadly, too. LLMs in particular are hot garbage. They are unreliable but with no easy way to verify what is or isn’t accurate, so people fully buy into misinformation created by these things. They also get treated as a source of truth or authority, meanwhile the types of responses you get are literally tailor-made to suit the needs of the organization doing the training by their training data set, input and activation functions, and the type of reinforcement learning they performed. This leads to people treating output from an LLM as authoritative truth, while it is just parroting the biases of the human text in its training data. They can’t do anything truly novel; they remix and add error to their training data in statistically nice ways. Not to mention they steal the labor of the working class in an attempt to mimic and replace it (poorly), they vacuum up private user data at unprecedented rates, and they are destroying the environment at every step in the process. To top it all off, people are cognitively offloading to these the same way they did for reliable tech in the past, but due to hallucinations and general unreliability those doing this are actively becoming less intelligent.

My closing thought is that “GenAI” is a massive bubble waiting to burst, and this tech won’t be going anywhere but it won’t be nearly as accessible after that happens. Companies right now are dumping tens or hundreds of billions a year into training and inferencing these models, but with annual revenues in the hundreds of millions for these sectors. It’s entirely unsustainable, and they’re all just racing to bleed the next guy white so they can be the last one standing to collect all the (potential future) profits. The cost of tokens for an LLM is rising, despite the marketing teams claiming the opposite when they put old models on steep discount while raising prices on the new ones. The number of tokens needed per prompt is also going up drastically with the “thinking”/“reasoning” approach that’s become popular. Training costs are rising with diminishing returns due to lack of new data and poor quality generated data getting fed back in (risking model collapse). The costs will only go up more and more quickly, and with nothing to show for it. All of this for something which you’re going to need to review and edit anyway to ensure any standard of accuracy, so you may as well have just done the work yourself and been better off financially and mentally.

I’m not fully against it but I need to know it’s AI and I prefer real images

I know there’s been a lot of debate here on it, but personally, no matter the ethical issues, honestly they just look bad 90% of the time.

And that 10% where they do look passable probably came after a ton of prompting or manual editing. I feel like at a certain point it’s probably better to just learn how to draw.

The only time I genuinely get angry is when the AI images are made in the Studio Ghibli style, because they look even worse thanks to the uncanny valley. I’m not a pro-copyright person, but if we could get rid of that style from image generation services I think it would actually make AI images better.

Butlerian Jihad. Now.

Some nuanced takes from someone who is both a consumer and a researcher.

Personally, if somebody I follow posts one it usually smacks of laziness, the degree of acceptability of which can depend on the purpose of the image.

If the intent is artistic, unless it’s something really good (or often a commentary on AI art itself), it looks like bland regurgitated crap.

If the objective is political, more often than not it feels like the person thought up some bland idea and didn’t think it through enough.

If it’s for marketing, especially from small businesses who couldn’t afford actual designers, I find it often does the job well enough.

Socially, we live under developed capitalism, and so every single human product is commodified. Value is imbued in a commodity by the human labour socially required to produce it. “AI art” doesn’t just have lower labour value, it actually reduces the socially required labour for the production of digital images, which in turn depreciates their value even for human producers.

Human-made images can only remain competitive by differentiation from “AI” ones, which, although it is already happening very naturally, is no easy task for beginners. This puts pressure on artists, photographers and such, who are not big fish in a market intentionally flooded with “AI art” by the internet companies who control that market and hold a massive stake in “AI” investment and adoption.

And finally, scientifically, “Artificial Neural Networks” are fantastic interpolators, but terrible extrapolators. This permeates the entire field of DNN-based “AI”, which is why some sort of extrapolation is always at the edge of “AI” futurology stock-hype, often solved by just another layer of interpolation rather than breaking the mathematical limits of DNNs.
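
To make the interpolation/extrapolation point a bit more concrete, here is a minimal sketch. It assumes a one-hidden-layer ReLU network whose weights are set by hand (nothing is trained) so that it matches x² exactly at x = 0, 1, 2, 3, 4. The names `relu_net`, `KNOTS` and `WEIGHTS` are made up for illustration. A ReLU network is piecewise linear, so it can hug the curve between the points it was fitted to, but past the fitted range it just keeps going in a straight line:

```python
# Hand-built one-hidden-layer ReLU network (illustrative assumption, not trained).
# Each hidden unit computes relu(x - knot); the output is a weighted sum of them.
KNOTS = [0.0, 1.0, 2.0, 3.0]    # hidden-unit biases (where the slope changes)
WEIGHTS = [1.0, 2.0, 2.0, 2.0]  # output weights, chosen so net(k) == k*k for k = 0..4


def relu(z):
    return max(0.0, z)


def relu_net(x):
    """Piecewise-linear function that matches x**2 at x = 0, 1, 2, 3, 4."""
    return sum(w * relu(x - b) for w, b in zip(WEIGHTS, KNOTS))


def target(x):
    return x * x


def abs_error(x):
    return abs(relu_net(x) - target(x))


# Interpolation: points inside the fitted range [0, 4].
inside = [i / 10 for i in range(0, 41)]
max_inside_error = max(abs_error(x) for x in inside)

# Extrapolation: points beyond the fitted range.
outside = [5.0, 10.0, 20.0]
outside_errors = [abs_error(x) for x in outside]

print("max error inside [0, 4]:", max_inside_error)
for x, err in zip(outside, outside_errors):
    print(f"error at x = {x}: {err}")
```

Inside the fitted range the error stays small and bounded; outside it grows without limit, because the network has no way to "know" the curve keeps bending. Real trained networks are much bigger, but the piecewise-linear behaviour of ReLU units is the same.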

Yesterday’s “MLP learned XOR” is today’s “GPT learned to count”, but at the end of the day most hopes built into “AI” are fundamentally flawed, and most advances are born out of a lot of good work on interpolation being masked as “another step towards AGI”.
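
For anyone who hasn’t met the XOR reference: a single-layer perceptron cannot represent XOR, but one hidden layer is enough. A minimal sketch with hand-picked weights (an assumption for illustration; `xor_mlp` is a made-up name and nothing here is learned):

```python
def relu(z):
    return max(0, z)


def xor_mlp(x1, x2):
    """Two hidden ReLU units followed by a linear output; weights chosen by hand."""
    h1 = relu(x1 + x2)      # counts how many inputs are on
    h2 = relu(x1 + x2 - 1)  # fires only when both inputs are on
    return h1 - 2 * h2      # cancels the (1, 1) case back down to 0


# Evaluate the network on all four binary input pairs.
truth_table = {(a, b): xor_mlp(a, b) for a in (0, 1) for b in (0, 1)}
print(truth_table)
```

The network only has to get four points right, which is about as pure an interpolation task as it gets.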